Russia AI #1: But how good is GigaChat 2 on medicine, seed science and shrimp?

This series is about AI in Russia and the Russian-speaking world, and its effects elsewhere. I will get better as I go. :) Поехали! (Let's go!)

In Import AI 417, Jack Clark writes about the reported performance of GigaChat 2 Max, the strongest Russian LLM, created by Sberbank, the largest bank in Russia. Quoting from midway through:

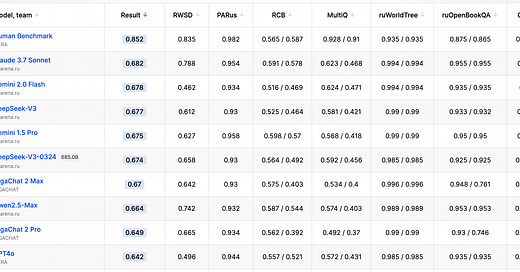

> The greatest signal may be in the Russian language benchmark: The authors test out the models on the MERA benchmark, which is a leaderboard for testing out models on Russian-specific tasks. I think I believe these scores? GigaChat 2 Max (the large-scale closed-weight model) comes in at an overall score of 0.67, coming in sixth place behind models like Claude 3.7 Sonnet, DeepSeek, and Gemini 1.5 Pro. This makes some amount of intuitive sense – all those models are way better than the scores described in this paper, so the ranking here makes sense.

MERA was set up by AI Alliance Russia, a consortium of Russian big tech, oil and gas, and telecom companies operating under government supervision. This is the MERA leaderboard; here's the top 10 at the time of writing, out of 92 entries:

(Don’t be so surprised by the Human Benchmark score. I was proud of myself on that exam day.)

Take a look at MERA's Industrial section, which is new: it launched last month. It has three leaderboards: agronomy, aquaculture and medicine. From MERA:

> There are currently three tasks posted on the site, two of which are on agriculture and one on medicine:
>
> ruTXTAgroBench: a dataset designed to measure the professional knowledge of the model acquired during pre-training in the field of agronomy. Consists of 2935 original questions on agronomy, covering botany, forage production and grassland farming, melioration agriculture, general genetics, general agriculture, basics of selection, plant growing, seed production and seed science, farming systems in various agricultural landscapes, and crop cultivation technologies.
>
> ruTXTAquaBench: a dataset designed to measure the professional knowledge of the model acquired during pre-training in the field of aquaculture. Consists of 1102 tasks on aquaculture, including industrial aquaculture, feeding of fish and aquatic organisms, mariculture (e.g. breeding crayfish, shrimp, pearl farming), and ichthyopathology (veterinary science, prevention and optimization of fish farming technologies).
>
> ruTXTMedQFundamental: a dataset covering 17 fundamental medical disciplines from cell biology to clinical practices (surgery, therapy, laboratory diagnostics, pharmacology). The test includes 270 questions and 30 training tasks for each discipline, which allows you to compare the level of knowledge of the models with the level of a medical university graduate.
>
> The datasets are completely original and compiled in Russian.

Each of these leaderboards currently has six entries. Here's the medicine one:

Claude, Gemini and DeepSeek, all of which beat GigaChat on general MERA, do not appear on these yet.

GPT-4.1, which is not on general MERA, beats GigaChat 2 Max on all three industrial leaderboards. GigaChat 2 Max comes second on all three.